I lose all data from Jenkins every time I deploy it. And that’s OK.

Background: a rant

Let me start off by saying: I’ve done a lot of Jenkins.

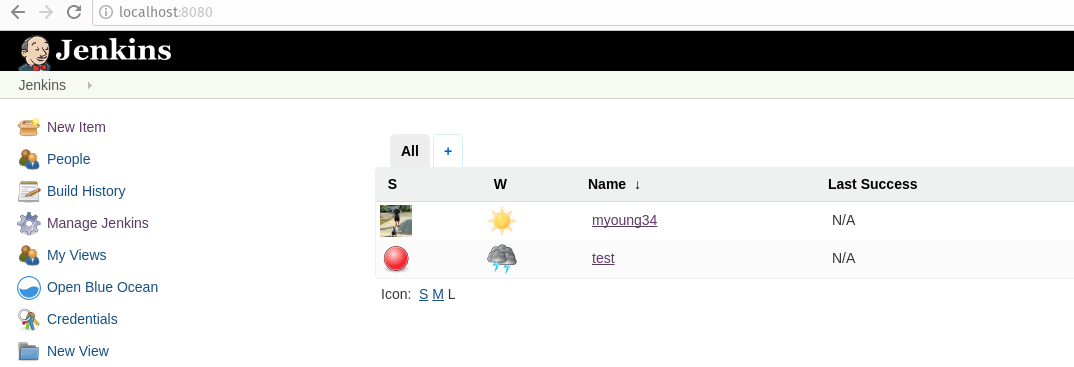

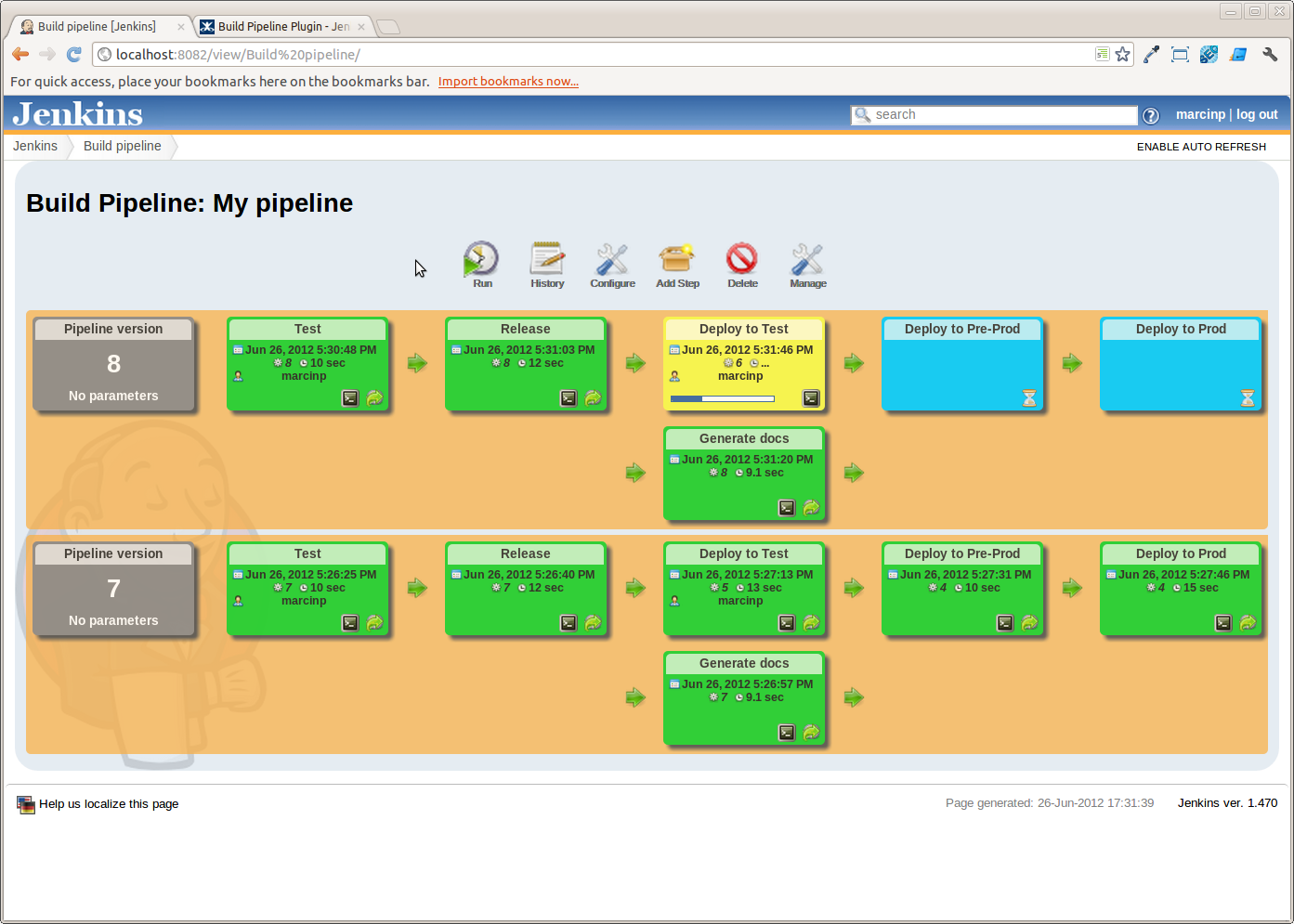

- Exhibit A (super old jenkins with fake pipelines):

I’ve done new Jenkins (I love 2.0 and love Jenkinsfiles).

I’ve worked with large Jenkins installs. I’ve worked with small Jenkins installs at multiple shops.

I’ve automated backups with S3 tarballs.

I’ve automated backups with ThinBackup.

I’ve automated creating jobs with Job DSL.

I’ve automated creating jobs with straight XML.

I’ve automated creating jobs with Jenkins Job Builder.

They all suck. I’m sorry, but they’re complex and need a lot of orchestration and customization to work.

I’m gonna do worse

My problem with all these methods is that they require a hybrid suck: you have to automate the jobs and then mix that with retaining your backups.

I’m tired of things using the filesystem as a database.

These things are complex because the data lives in the same place as the jobs. As your jobs run, they pile up build history metadata right next to their definitions. So you end up with nasty, complicated methods of pulling down the filesystem and merging it back together.
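To make the problem concrete: Jenkins keeps each job’s definition in `$JENKINS_HOME/jobs/<name>/config.xml` and that job’s build records under `$JENKINS_HOME/jobs/<name>/builds/`, in the same directory. The Python sketch below uses a deliberately simplified model of that layout (it ignores folders, plugins and other state) to sort the files in such a tree into job config versus build history. Everything that lands in `history` is what a backup has to merge back on top of freshly automated config.

```python
from pathlib import Path


def classify_jenkins_home(jenkins_home):
    """Sort files under a JENKINS_HOME-style tree into job config vs. build history.

    Simplified model (an assumption, not the full Jenkins layout): job definitions
    are jobs/<name>/config.xml, build records live under jobs/<name>/builds/,
    and everything else (plugins, global config, folders) counts as "other".
    """
    root = Path(jenkins_home)
    result = {"config": [], "history": [], "other": []}
    for path in root.rglob("*"):
        if not path.is_file():
            continue
        parts = path.relative_to(root).parts
        rel = "/".join(parts)
        if parts[0] == "jobs" and "builds" in parts[:-1]:
            result["history"].append(rel)  # build.xml, logs, etc.
        elif parts[0] == "jobs" and parts[-1] == "config.xml":
            result["config"].append(rel)  # the part job automation recreates
        else:
            result["other"].append(rel)
    for files in result.values():
        files.sort()
    return result
```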

My hypothesis: #(@$ that. Let’s use a real database and artifact store for the data and just not care. Crazy, right?!

I’ve got some demo code to prove to you how easy it is. It’s 100% Groovy. No Job DSL, no nothing. Just Groovy and Java methods. The demo I posted shows how I used Groovy to automate setting up:

- Active Directory

- AWS ECS agents

- GitHub organizations (including webhooks!)

- Slack

- Libraries, like better Slack messages, and jobs defined in a Java properties file

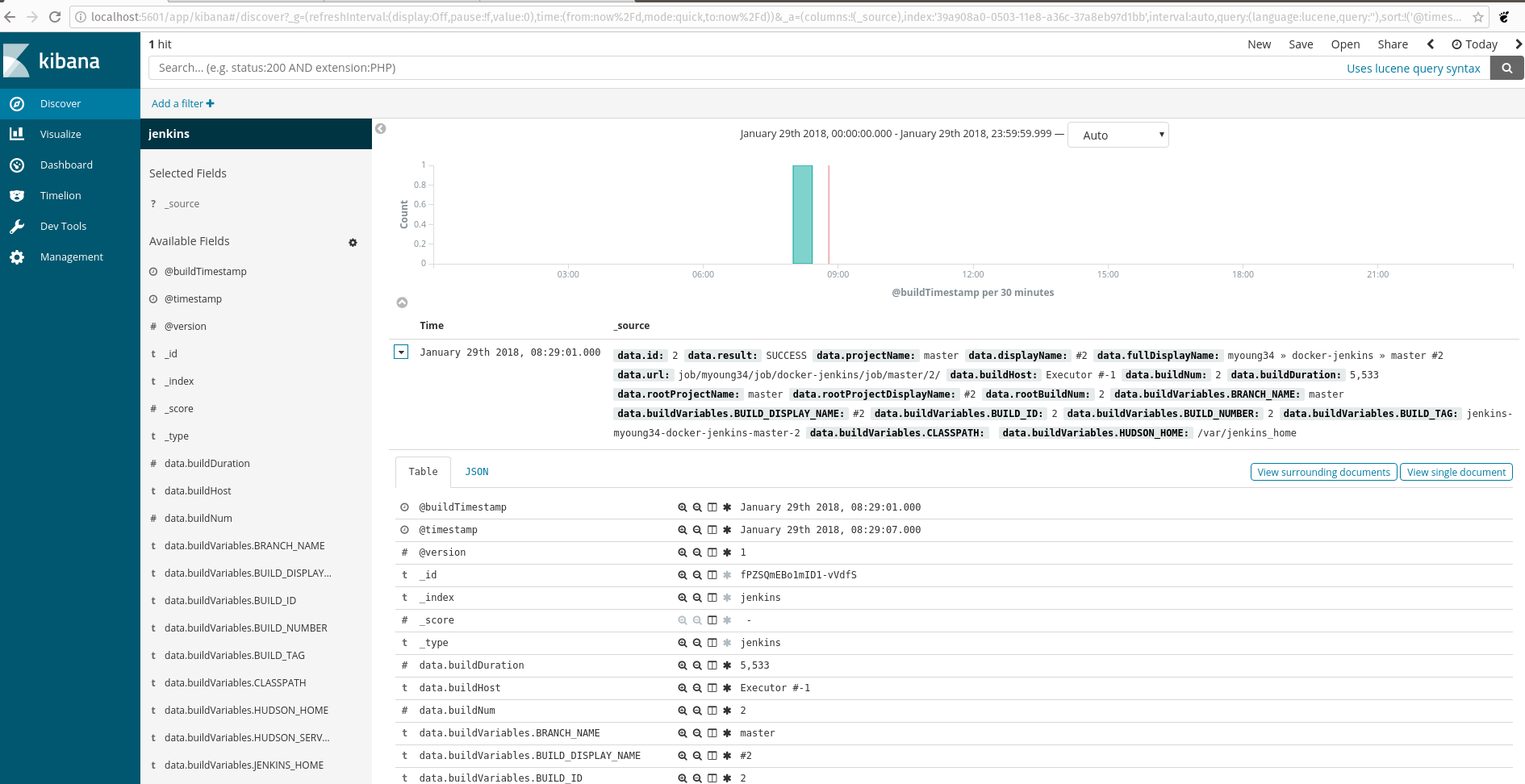

- Logstash for the real meat of this post
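The post doesn’t show the format of that properties file, so here is a minimal sketch under a hypothetical key scheme, `jobs.<name>.<field>=value`. In Groovy you would most likely just call `java.util.Properties.load()`; this Python version hand-parses the simple `key=value` / `key: value` subset (no line continuations or escape sequences) and groups the keys into one dict per job that a setup script could then loop over.

```python
def parse_properties(text):
    """Parse a simple Java-style .properties file.

    Handles 'key=value' and 'key: value' lines plus '#' and '!' comments.
    Line continuations and escape sequences are NOT handled.
    """
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":
            continue
        # Split on whichever separator ('=' or ':') appears first.
        seps = [i for i in (line.find("="), line.find(":")) if i != -1]
        if not seps:
            props[line] = ""
            continue
        idx = min(seps)
        props[line[:idx].strip()] = line[idx + 1:].strip()
    return props


def jobs_from_properties(props, prefix="jobs."):
    """Group hypothetical 'jobs.<name>.<field>' keys into {name: {field: value}}."""
    jobs = {}
    for key, value in props.items():
        if not key.startswith(prefix):
            continue
        # rpartition keeps dots inside the job name and takes the last segment as the field
        name, _, field = key[len(prefix):].rpartition(".")
        if not name or not field:
            continue
        jobs.setdefault(name, {})[field] = value
    return jobs
```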

The real reason you’re here

My Jenkins master runs in Docker. It’s volatile. And it’s stateless.

So how do I do it? In production, my Docker hosts run Logstash listening for syslog on UDP port 555, and it forwards those messages to ELK (because SSL, and other reasons). In my demo, the Jenkins Logstash plugin just sends directly to Elasticsearch. All the env vars are pulled from Vault at run time, and I get to manage the Jenkins data the same way as the rest of my data in ELK.
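For reference, a syslog message on the wire is just a UDP datagram with a `<PRI>` prefix, where PRI = facility * 8 + severity (RFC 3164). This sketch formats such a line and fires it at a listener, defaulting to port 555 to match the production setup described above. The `local0`/`info` defaults, the `jenkins` tag and the hostname are illustrative assumptions, and the receiving Logstash side (for example a `udp` or `syslog` input) is assumed rather than shown.

```python
import socket
from datetime import datetime


def format_syslog(message, hostname, tag="jenkins", facility=16, severity=6, now=None):
    """Build an RFC 3164-style syslog line (facility 16 = local0, severity 6 = info)."""
    now = now or datetime.now()
    pri = facility * 8 + severity
    # RFC 3164 timestamp: "Mmm dd hh:mm:ss", day space-padded to two characters
    timestamp = f"{now.strftime('%b')} {now.day:>2} {now.strftime('%H:%M:%S')}"
    return f"<{pri}>{timestamp} {hostname} {tag}: {message}"


def send_syslog(line, host="127.0.0.1", port=555):
    """Send one datagram to a syslog listener. UDP gives no delivery guarantee."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(line.encode("utf-8"), (host, port))
```

Fire-and-forget UDP keeps the Jenkins side simple; anything that needs TLS or guaranteed delivery is handled by the Logstash hop, which matches the "because SSL" reasoning above.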

My artifacts go to something like Artifactory.
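Pushing an artifact to Artifactory can be as simple as an HTTP PUT to `<base-url>/<repo>/<path>`. This standard-library sketch only builds the request (send it with `urllib.request.urlopen(req)`); the base URL, repository name and bearer-token auth are placeholder assumptions, and the `X-Checksum-Sha1` header gives the server a checksum to verify the upload against.

```python
import hashlib
import urllib.request


def build_artifact_upload(base_url, repo, path, payload, token=None):
    """Build (but do not send) a PUT that deploys `payload` to <base_url>/<repo>/<path>."""
    url = f"{base_url.rstrip('/')}/{repo}/{path.lstrip('/')}"
    req = urllib.request.Request(url, data=payload, method="PUT")
    # Checksum of the uploaded bytes so the server can verify what it received
    req.add_header("X-Checksum-Sha1", hashlib.sha1(payload).hexdigest())
    if token:
        # Placeholder auth scheme; use whatever your artifact store expects
        req.add_header("Authorization", f"Bearer {token}")
    return req
```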

And guess what? It’s fantastic. Automation is dead simple. And the data lives in a real database, which lets me do things like compare build times across projects, track deployments, etc.
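As one example of what a real database buys you, average build time per project becomes a single Elasticsearch aggregation: a `terms` bucket per project with an `avg` sub-aggregation. The field names `project` and `duration_ms` are hypothetical, so substitute whatever your Logstash pipeline actually indexes (with default dynamic mapping, a text field usually needs its `.keyword` sub-field for a `terms` aggregation). POST the body to `<index>/_search` and hand the JSON response to `avg_build_times`.

```python
def build_time_query(project_field="project", duration_field="duration_ms", size=50):
    """Elasticsearch search body: average build duration per project.

    Field names are hypothetical placeholders for whatever your pipeline indexes.
    """
    return {
        "size": 0,  # return only the aggregation, no individual hits
        "aggs": {
            "by_project": {
                "terms": {"field": project_field, "size": size},
                "aggs": {"avg_duration": {"avg": {"field": duration_field}}},
            }
        },
    }


def avg_build_times(response):
    """Turn the aggregation response into {project: average duration}."""
    buckets = response["aggregations"]["by_project"]["buckets"]
    return {b["key"]: b["avg_duration"]["value"] for b in buckets}
```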